We trained a CodeBERT model on a small dataset of manually selected reentrancy vulnerability examples, outperformed every static analysis tool available at the time, and found a couple of real-world cases where DeFi project and DAO admins could withdraw all tokens from the contract.

Reentrancy vulnerabilities: a short recap

For a broad overview of reentrancy attacks, check out this resource.

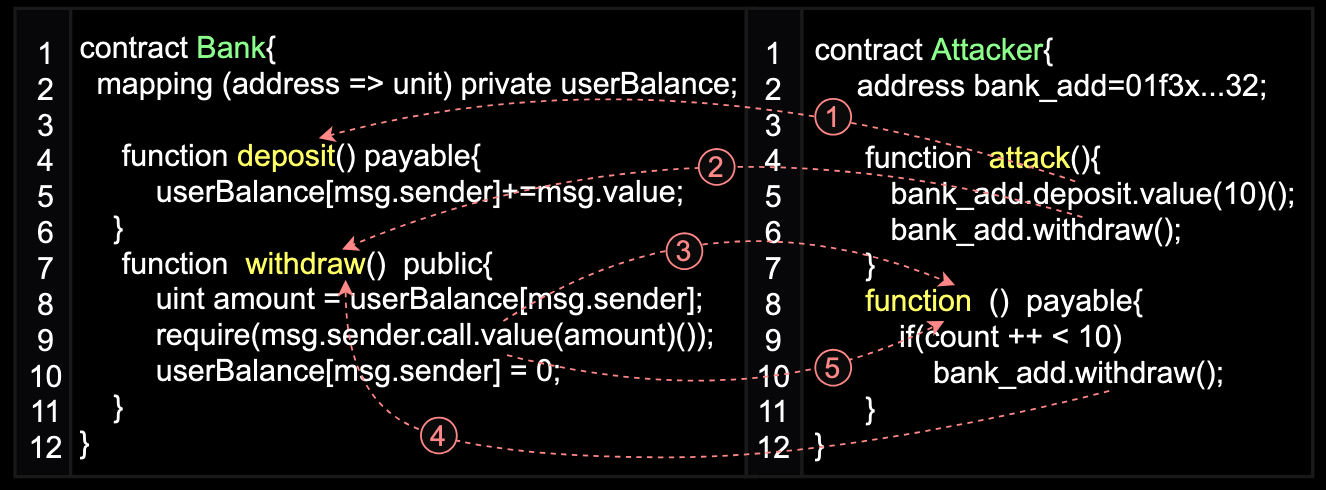

A classic form of reentrancy attack occurs when malicious actors exploit a loophole to repeatedly withdraw funds from a victim contract until it is completely drained.

This attack type was first disclosed in 2016 during The DAO hack, where attackers siphoned approximately $60 million worth of Ether, making it one of the most severe incidents in Ethereum history at the time.

The vulnerability occurs when a smart contract's function contains a call to an arbitrary address before critical state updates. This allows the function to be called multiple times during a single transaction before the initial call completes. In such cases, an attacker can create a malicious contract that exploits this flaw by recursively invoking the vulnerable function.

One of the things that makes reentrancy possible is Ethereum's fallback mechanism. When the victim contract sends Ether to the attacker's contract, it automatically triggers the fallback function in the attacker's contract. If this fallback function contains malicious code, it can immediately call back into the victim contract's withdrawal function before the previous execution completes.
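To make the mechanism concrete, here is a toy Python model of the pattern described above. It is only a sketch: `VulnerableVault`, `Attacker`, `HonestUser` and `simulate_attack` are hypothetical names of ours, and the `receive` method stands in for a contract's fallback function. The vault pays out first and clears the balance afterwards, so the attacker's "fallback" can re-enter `withdraw` while its balance is still recorded.

```python
class VulnerableVault:
    """Toy model of a contract that pays out before updating its books."""

    def __init__(self):
        self.balances = {}  # per-depositor accounting
        self.funds = 0      # Ether actually held by the vault

    def deposit(self, account, amount):
        self.balances[account] = self.balances.get(account, 0) + amount
        self.funds += amount

    def withdraw(self, account):
        amount = self.balances.get(account, 0)
        if amount == 0 or self.funds < amount:
            return
        # Interaction first: sending Ether triggers the receiver's fallback.
        self.funds -= amount
        account.receive(self, amount)
        # Effect last: the balance is cleared only after the external call.
        self.balances[account] = 0


class HonestUser:
    def __init__(self):
        self.wallet = 0

    def receive(self, vault, amount):
        self.wallet += amount


class Attacker:
    def __init__(self):
        self.wallet = 0

    def receive(self, vault, amount):
        self.wallet += amount
        # Re-enter while the vault still believes we have a balance.
        vault.withdraw(self)


def simulate_attack():
    vault, honest, attacker = VulnerableVault(), HonestUser(), Attacker()
    vault.deposit(honest, 9)
    vault.deposit(attacker, 1)
    vault.withdraw(attacker)
    return attacker.wallet, vault.funds


print(simulate_attack())  # (10, 0): the attacker deposited 1 and left with everything
```

The attacker deposits 1 unit and walks away with all 10, because every nested call sees the same uncleared balance.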

While "true" reentrancy vulnerabilities are quite rare nowadays, a common and still quite dangerous kind of real-world vulnerability is reentrancy that requires a trusted role, e.g. admin, dev or owner.

These are often overlooked and considered low-risk because exploiting them requires access to a member's account, which is assumed to be "safe" or "trusted".

Our approach to training

Using a public smart contract vulnerability dataset

For our study, we used the SmartBugs Wild dataset, the largest labeled dataset of real-world open-source smart contracts, with labels generated by different vulnerability detection tools.

In total, the dataset contains 428,337 analyses performed by nine automated tools.

Moreover, the authors further categorized the identified vulnerabilities into distinct patterns, providing a more structured version of the dataset, perfect for LLM training.

We decided to find and classify vulnerabilities in functions, not in whole contracts, for a few simple reasons.

First, in most cases a single function gives us enough information to spot the types of vulnerabilities we're interested in. Anything more would be extra information we don't need.

Second, many of the models we might want to use have limited context windows, e.g. the CodeBERT model that we ended up using has a limit of 512 tokens.

Before training the model we performed some additional data processing steps: removed all comments from the code, deleted any personally identifiable information (PII), extracted the functions from each contract, and mapped them to their respective vulnerabilities as detected by security tools. The label for each function corresponded to the type of vulnerability pattern identified. This resulted in a multiclass classification task.
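As an illustration of the comment removal and function extraction steps, here is a minimal Python sketch. It is not our production pipeline: `strip_comments` and `extract_functions` are hypothetical helpers, the regex does not protect `//` inside string literals, and overloaded functions with the same name overwrite each other. A real pipeline would more likely rely on a proper Solidity parser.

```python
import re
from typing import Dict

COMMENT_RE = re.compile(r"//[^\n]*|/\*.*?\*/", re.DOTALL)
FUNCTION_RE = re.compile(r"\bfunction\s+(\w+)")


def strip_comments(source: str) -> str:
    """Remove // line comments and /* */ block comments (string literals are not handled)."""
    return COMMENT_RE.sub("", source)


def extract_functions(source: str) -> Dict[str, str]:
    """Map each function name to its source text by matching braces after the header."""
    functions = {}
    for match in FUNCTION_RE.finditer(source):
        open_brace = source.find("{", match.end())
        semicolon = source.find(";", match.end())
        # Skip declarations without a body (interfaces, abstract functions).
        if open_brace == -1 or (semicolon != -1 and semicolon < open_brace):
            continue
        depth = 0
        for pos in range(open_brace, len(source)):
            if source[pos] == "{":
                depth += 1
            elif source[pos] == "}":
                depth -= 1
                if depth == 0:
                    functions[match.group(1)] = source[match.start():pos + 1]
                    break
    return functions


contract_source = '''
contract Bank {
    mapping(address => uint256) balances; // user balances
    /* withdraw everything */
    function withdraw() external {
        uint256 amount = balances[msg.sender];
        if (amount > 0) {
            msg.sender.call{value: amount}("");
            balances[msg.sender] = 0;
        }
    }
    function deposit() external payable { balances[msg.sender] += msg.value; }
}
'''

functions = extract_functions(strip_comments(contract_source))
print(sorted(functions))  # ['deposit', 'withdraw']
```

Each extracted function body can then be paired with the vulnerability label that the tools reported for it.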

Then, we narrowed the focus to detecting only the reentrancy vulnerability and evaluated our model's performance on this specific task. The resulting metrics were relatively good compared to each individual tool whose data was used for training:

- Precision: 0.61

- Recall: 0.69

- F1-Score: 0.65
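As a quick sanity check, the F1-Score is the harmonic mean of precision and recall, so the third number follows from the first two. The helper name `f1_score` below is ours, chosen for illustration.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(round(f1_score(0.61, 0.69), 2))  # 0.65
```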

However, there is a major problem with the SmartBugs dataset, which we saw in depth while manually analyzing the successfully detected cases: since the dataset is built on top of static analysis tools' output, their limitations, false positives and false negatives all went into the training of the model.

The metrics above show how well our model predicts the analysis tools' output, but they say very little about precision and recall on actual real-world vulnerabilities.

Few-shot learning approach

Keeping in mind that a dataset labeled by tooling cannot possibly provide quality significantly better than the tools themselves, we decided to try an alternative approach. We researched the few-shot learning method, where a model is trained on a small number of examples, which can be obtained by manually labeling smart contracts (by a professional security auditor).

Since manually labeling the dataset requires significant effort, we ended up with a relatively small dataset: 500 functions, out of which 100 were confirmed to have a reentrancy vulnerability. The dataset was then divided into a training and a validation set, with the split depending on the number of examples needed for training.
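A split of this kind can be sketched as follows. This is an illustration under assumptions, not our exact procedure: `few_shot_split` is a hypothetical helper, the samples are placeholders, and the number of non-vulnerable functions placed in the training set (`n_safe`) is a value picked for the example.

```python
import random
from typing import List, Tuple

Sample = Tuple[str, int]  # (function source, label): 1 = reentrancy, 0 = safe


def few_shot_split(
    samples: List[Sample], n_vulnerable: int, n_safe: int, seed: int = 0
) -> Tuple[List[Sample], List[Sample]]:
    """Put a fixed number of examples per class into training; the rest is validation."""
    rng = random.Random(seed)
    vulnerable = [s for s in samples if s[1] == 1]
    safe = [s for s in samples if s[1] == 0]
    rng.shuffle(vulnerable)
    rng.shuffle(safe)
    train = vulnerable[:n_vulnerable] + safe[:n_safe]
    validation = vulnerable[n_vulnerable:] + safe[n_safe:]
    rng.shuffle(train)
    return train, validation


# Placeholder dataset with the proportions from the text: 500 functions, 100 vulnerable.
dataset = [(f"function_{i}", 1 if i < 100 else 0) for i in range(500)]

# n_safe=64 is an assumption made for this example.
train_set, validation_set = few_shot_split(dataset, n_vulnerable=64, n_safe=64)
print(len(train_set), len(validation_set))  # 128 372
```

Fixing the seed keeps the split reproducible, which matters when comparing runs with different training set sizes.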

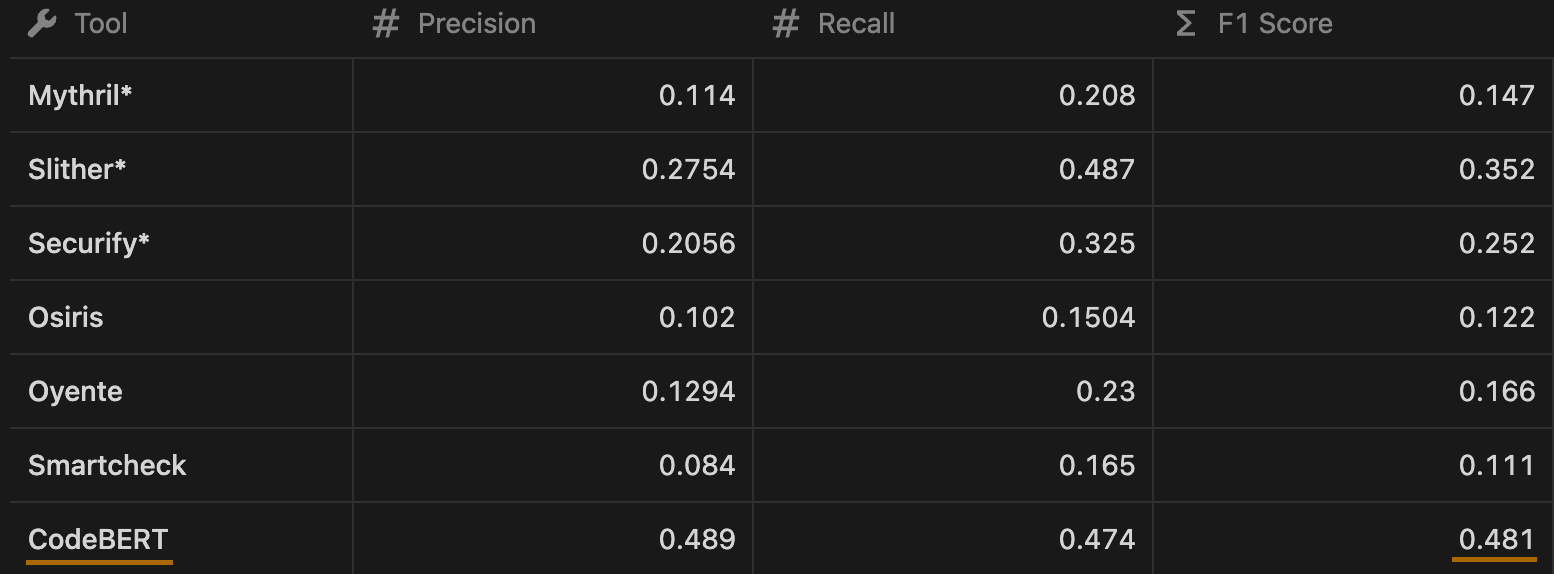

The results of our few-shot learning approach indicate that our model outperforms all the tools when trained with 64 real-world vulnerable examples: the CodeBERT model achieves the highest precision (0.4167) and F1-Score (0.4).

For the tools marked with a * sign, we tried various detection patterns and selected the best performing ones.

While a 0.48 F1 score is still far from perfect, this model quickly became one of the essential tools in our bundle.

It is very helpful in highlighting potential reentrancy vulnerabilities in large repositories. During our audits it provides additional assurance that we haven't missed anything.

Real-world cases

Automated reentrancy analysis is one of the many scan tools that we apply to any project we're looking into. Reentrancy is not the most common vulnerability nowadays, but its consequences can be extremely severe if exploited.

Connext (now Everclear)

Connext was one of the first and biggest protocols in which our model discovered a reentrancy issue (the issue is still there, by the way). Its TVL was $118.76m at the time of writing this post.

A vulnerability in the SwapAdminFacet.sol contract allows any member with admin or owner privileges to repeatedly drain the protocol contract until it's bankrupt:

    function removeSwap(bytes32 _key) external onlyOwnerOrAdmin {
        uint256 numPooledTokens = s.swapStorages[_key].pooledTokens.length;

        if (numPooledTokens == 0) revert SwapAdminFacet__removeSwap_notInitialized();
        if (!s.swapStorages[_key].disabled) revert SwapAdminFacet__removeSwap_notDisabledPool();
        if (s.swapStorages[_key].removeTime > block.timestamp) revert SwapAdminFacet__removeSwap_delayNotElapsed();

        for (uint256 i; i < numPooledTokens; ) {
            IERC20 pooledToken = s.swapStorages[_key].pooledTokens[i];

            if (s.swapStorages[_key].balances[i] > 0) {
                pooledToken.safeTransfer(msg.sender, s.swapStorages[_key].balances[i]);
                // ^- in case of malicious token can cause reentrancy which will repeat the transfers of previous tokens
            }

            delete s.tokenIndexes[_key][address(pooledToken)]; // <- this doesn't prevent reentrancy

            unchecked {
                ++i;
            }
        }

        _withdrawAdminFees(_key, msg.sender);

        delete s.swapStorages[_key]; // <- removes data from storage after external calls

        emit SwapRemoved(_key, msg.sender);
    }

Here is how an admin can steal an arbitrary ERC-20 token A from the contract:

- Deploy a malicious token M, which has a callback in transfer.

- Initialize a swap with tokens [A, M] (in that order).

- Add liquidity to the swap, resulting in a swap balance greater than zero.

- Disable the swap, wait 7 days.

- Deploy an attacker contract which will call removeSwap, and assign it the admin role.

- Execute the reentrancy and withdraw token A from the contract.
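The steps above can be modeled with a small Python simulation. This is a simplified sketch, not the Connext code: `Token`, `MaliciousToken`, `SwapFacet` and `run_attack` are hypothetical names, the amounts are made up, and the 7-day delay, liquidity provisioning and admin fee withdrawal are left out. It only shows why the [A, M] order matters: token A is paid out before M's transfer hands control back to the attacker, and the swap record is cleared only at the very end.

```python
class Token:
    """Minimal ERC-20-like ledger."""

    def __init__(self):
        self.balances = {}

    def mint(self, holder, amount):
        self.balances[holder] = self.balances.get(holder, 0) + amount

    def transfer(self, sender, recipient, amount):
        if self.balances.get(sender, 0) < amount:
            raise RuntimeError("insufficient balance")
        self.balances[sender] -= amount
        self.balances[recipient] = self.balances.get(recipient, 0) + amount


class MaliciousToken(Token):
    """Token M: its transfer hands control to a hook chosen by the attacker."""

    def __init__(self):
        super().__init__()
        self.hook = None

    def transfer(self, sender, recipient, amount):
        if self.hook is not None:
            self.hook()


class SwapFacet:
    def __init__(self):
        self.admins = set()
        self.swaps = {}  # key -> list of (token, recorded balance)

    def remove_swap(self, key, caller):
        if caller not in self.admins:
            raise PermissionError("not admin")
        swap = self.swaps.get(key)
        if swap is None:
            raise RuntimeError("swap not initialized")
        for token, balance in swap:
            if balance > 0:
                token.transfer(self, caller, balance)  # external call
        self.swaps.pop(key, None)  # record is cleared only after the transfers


def run_attack(reentries):
    facet, token_a, token_m = SwapFacet(), Token(), MaliciousToken()
    attacker = "attacker-contract"
    facet.admins.add(attacker)
    token_a.mint(facet, 1000)  # all of token A held by the facet, across pools
    facet.swaps["key"] = [(token_a, 100), (token_m, 1)]  # order [A, M]
    remaining = [reentries]

    def hook():
        if remaining[0] > 0:
            remaining[0] -= 1
            facet.remove_swap("key", attacker)  # re-enter before the record is deleted

    token_m.hook = hook
    facet.remove_swap("key", attacker)
    return token_a.balances[attacker], token_a.balances[facet]


print(run_attack(reentries=3))  # (400, 600)
```

With three re-entries, the recorded balance of 100 A is paid out four times; more re-entries drain proportionally more until the facet runs out of token A.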

We reported this problem to Connext a while ago, and from their response we gathered that the protocol accepted this risk, same as many projects which ignore threats like that. In our opinion, this kind of ignorance is unacceptable for big projects that hold users' money.

The most commonly cited reason for not addressing these issues is that such attacks are "extremely unlikely and therefore don't create high risks".

However, what all projects and their teams should remember is that most protocols that fall victim to a substantial attack (and here we're talking about potentially draining the whole TVL) don't survive it. So even if the risk seems low, the consequences of an unaddressed risk might be fatal, both for the project team and its users.

Aladdin DAO

Aladdin DAO (an old project) had a similar issue, which was discovered during an automated scan of the project's contracts:

In the StakeDAOVaultBase contract there was a reentrancy vulnerability that allows the owner to withdraw the staking token using the takeWithdrawFee function.

    function takeWithdrawFee(address _recipient) external onlyOwner {
        uint256 _amount = withdrawFeeAccumulated;

        if (_amount > 0) {
            IConcentratorStakeDAOLocker(stakeDAOProxy).withdraw(gauge, stakingToken, _amount, _recipient); // <-- external call

            withdrawFeeAccumulated = 0; // <-- state changes after external call

            emit TakeWithdrawFee(_amount);
        }
    }

The aladdin-v3-contract isn't archived and there were commits last week.

There was, and still is, one other reentrancy vector in StakeDAOLockerProxy.sol.

Here, if the current token balance is less than the desired withdrawal amount, the function interacts with a potentially untrusted contract _gauge which can be manipulated to exploit the reentrancy vulnerability:

    function withdraw(
        address _gauge,
        address _token,
        uint256 _amount,
        address _recipient
    ) override onlyOperator(_gauge) {
        uint256 _balance = IERC20Upgradeable(_token).balanceOf(address(this));

        if (_balance < _amount) {
            IStakeDAOGauge(_gauge).withdraw(_amount - _balance); // <-- possible callback
        }

        IERC20Upgradeable(_token).safeTransfer(_recipient, _amount);
    }

Given the lack of reentrancy protection, a malicious gauge implementation could, in theory, reenter the takeWithdrawFee function, allowing an owner to drain funds from the contract.

Updating withdrawFeeAccumulated before executing the withdraw function would seemingly solve the problem here.
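The effect of reordering can be shown with a toy Python model. This is a sketch under assumptions rather than the Aladdin code: `FeeVault`, `ReentrantLocker` and `total_paid_out` are hypothetical names, and the locker stands in for a locker or gauge that calls back into the vault during the withdrawal.

```python
class ReentrantLocker:
    """Stands in for an external locker/gauge that calls back into the vault."""

    def __init__(self, reentries):
        self.reentries_left = reentries
        self.paid_out = 0

    def withdraw(self, vault, amount, recipient):
        self.paid_out += amount
        if self.reentries_left > 0:
            self.reentries_left -= 1
            vault.take_withdraw_fee(recipient)  # callback during the external call


class FeeVault:
    def __init__(self, locker, fee_accumulated, effects_first):
        self.locker = locker
        self.withdraw_fee_accumulated = fee_accumulated
        self.effects_first = effects_first

    def take_withdraw_fee(self, recipient):
        amount = self.withdraw_fee_accumulated
        if amount > 0:
            if self.effects_first:
                # Reordered version: state is updated before the external call.
                self.withdraw_fee_accumulated = 0
                self.locker.withdraw(self, amount, recipient)
            else:
                # Original ordering: external call first, state update afterwards.
                self.locker.withdraw(self, amount, recipient)
                self.withdraw_fee_accumulated = 0


def total_paid_out(effects_first, reentries=2, fee=50):
    locker = ReentrantLocker(reentries)
    vault = FeeVault(locker, fee, effects_first)
    vault.take_withdraw_fee("owner")
    return locker.paid_out


print(total_paid_out(effects_first=False))  # 150: the fee is paid three times
print(total_paid_out(effects_first=True))   # 50: the nested calls see a zero fee
```

In the reordered version the nested call reads a zeroed `withdraw_fee_accumulated` and does nothing, which is why the checks-effects-interactions ordering closes this particular path.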

Admins should not be able to withdraw all tokens

In both cases, the projects dismissed the vulnerabilities as highly unlikely and not severe enough, since they require admin control.

However, this approach to security and trust in decentralized projects puts users and token holders at risk of losing everything they have invested in the protocol and stored in the project's smart contracts.

Those claiming that "admin privileges are supposed to be high" and "those mentioned interfaces can only be invoked by certain members, which is why it's not a problem for now" should take a look at the dozens of examples of protocol exploits by a malicious project member or by an attacker who compromised an admin's account.

Needless to say, neither Decentralized Finance projects nor Decentralized Autonomous Organizations should give owners, developers or any other members privileges that would allow them to drain all locked tokens with a relatively simple technique.

The end

If you're interested in the dataset we used or would like us to run the model on your project's code, please reach out on Telegram or by email: contact@unvariant.io